Helen Angear is leading the PhD project, supported by postgraduate student Beth Mills. Technical support and advice for the project are supplied by the Digital Humanities team at the University of Exeter. The Digital Team offer training and support in TEI encoding, and Helen and Beth have been transcribing and marking up a selection of letters in the XML editor <oXygen/>.

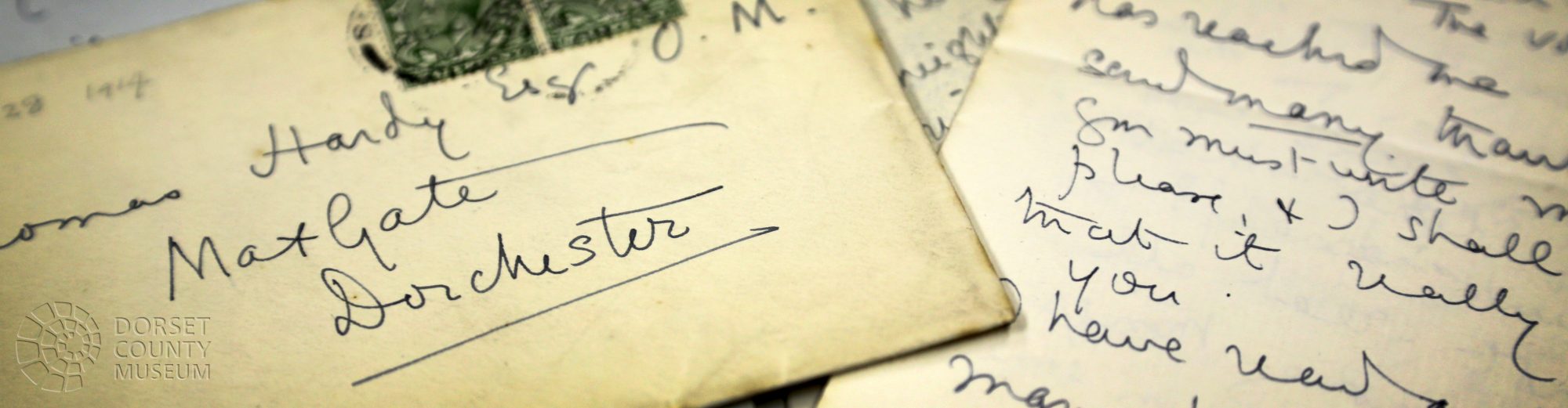

Letters are being photographed using a Phase One camera system and conservation copy cradle, which serve as our semi-permanent digitising station and are located near the Heritage Collections store. The system captures high-quality images at extremely fine resolution, making them ideal for studying every detail of the original manuscripts.

Planning and metadata

Before we started work on the letters, we needed to plan how long it would take us to digitise the number of letters we had to work with, and to work out the logistics of transporting them from Dorset County Museum (DCM). The letters had two different sets of library marks applied to them: one from DCM (referred to as an H number) and one from the Weber system (a catalogue of the letters that had been compiled in the ’60s, but which was now out of date, as new letters had come to light). We had to work out how these library marks corresponded to each other, so that we could match them up.

We then had to work out what metadata we needed to store about the letters as they were processed: their condition, their H number, their Weber reference number (if any), their measurements, and any other miscellaneous notes. This record had to be comprehensive for each letter while we had access to it. We borrowed the letters from the museum in batches and returned each batch when we collected the next, so if we missed measuring a letter, we couldn’t go back and measure it again. We also wanted to be as efficient as possible so that we handled the letters as little as we could, and so caused as little damage to them as possible.

We set up a spreadsheet at first, and later a database, to record all of the necessary information about each text as it was processed and photographed. We needed to keep a record of which photograph matched which letter and which page for future reference; otherwise, when we came back to an image, we would not be able to identify the manuscript.
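To make the idea concrete, here is a minimal sketch of what one row of such a record might look like. It is not our actual spreadsheet or database: the field names, the `PageRecord` class and the two helper functions are all made up for illustration. It simply shows one row per photograph, with a check for fields that need filling in while the letter is still on loan.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields
from typing import List, Optional


@dataclass
class PageRecord:
    """One photographed page of one letter (all field names are illustrative)."""
    image_number: str             # number assigned to the photograph
    h_number: str                 # DCM library mark
    weber_number: Optional[str]   # Weber catalogue reference, if the letter has one
    page: int                     # page of the letter shown in this photograph
    condition: str
    height_mm: Optional[float]
    width_mm: Optional[float]
    notes: str = ""


def missing_fields(record: PageRecord) -> List[str]:
    """Return the names of required fields that are still empty."""
    required = ["image_number", "h_number", "condition", "height_mm", "width_mm"]
    return [name for name in required if getattr(record, name) in (None, "")]


def to_csv(records: List[PageRecord]) -> str:
    """Serialise the records as CSV text, one row per photograph."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(PageRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()
```

Calling `missing_fields` before a letter goes back into its file would flag, for example, a measurement that had not yet been taken. The Weber number is optional here because not every letter has one.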

We worked together to create a workflow for digitising the texts, so that PhD student Helen had a process to work through each time she photographed a text. It’s important to have a standard workflow for each item so that you know you have completed all of the necessary steps before moving on. We kept a note of the original camera settings and position so that the camera was always the same distance from the letters, with the same settings, and each image would be consistent.

Working on the texts

Helen is in charge of the hard work of digitising the texts. As the scale of the project became clear, she was joined by postgraduate student Beth and by undergraduate student volunteers. There are more letters than we originally estimated, and digitising is a slow process, as you have to avoid damaging the materials when handling them. All of the digitisation is done at our copystation in the Old Library. With clean hands, we carefully remove each letter from its file and make notes about its H number, its condition, its measurements and so on. Each side of the leaf is positioned in the centre of the lens, then photographed, with a note made in the spreadsheet about which page number is which. Once we have finished with a letter, it is carefully returned to its storage, and the process starts again with the next one.

Working with the results

When we exported the images, we used the Capture One software to preserve the right colour balance, measured against a colour control card included in each photograph. We exported them as lossless TIFF files, and then used batch processing to automatically crop them so that they focussed on the letter only, and left none of the space around the outside.

Some of the letters presented a problem as they did not work with the auto-cropping process we used. The process identifies the lightest and darkest parts of the image (the letter and the copystand background), and crops out the darkest. However, some of the letters had a black border printed directly on them, which interfered with the automation process. A workaround had to be devised specifically for these letters. Two-page spreads presented another problem, as the auto-cropping process could not initially identify the two different pages of the letter. Again, we could accommodate this by adjusting the original batch process.
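The general idea behind this kind of cropping can be sketched in a few lines. This is not the batch process we actually ran, just an illustration under simple assumptions: the image is a grid of greyscale values, anything brighter than a threshold counts as paper, and the crop is the smallest box that contains all of the bright pixels. The function names and the default threshold of 128 are invented for the example.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of greyscale values, 0 = black, 255 = white


def crop_box(image: Image, threshold: int = 128) -> Tuple[int, int, int, int]:
    """Return (top, left, bottom, right) of the smallest box containing every
    pixel brighter than `threshold`; bottom and right are exclusive."""
    rows = [y for y, row in enumerate(image) if any(v > threshold for v in row)]
    cols = [x for x in range(len(image[0]))
            if any(row[x] > threshold for row in image)]
    if not rows or not cols:
        raise ValueError("no pixels brighter than the threshold")
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def crop(image: Image, threshold: int = 128) -> Image:
    """Cut the image down to the box found by crop_box."""
    top, left, bottom, right = crop_box(image, threshold)
    return [row[left:right] for row in image[top:bottom]]
```

A sketch like this also suggests one way a printed black border could cause trouble: if the border runs right up to the edge of the paper, its pixels look the same as the dark background, so a purely brightness-based crop would cut the border off along with the background.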

Because we had saved the H number of each letter in our database alongside the image number, we could auto-generate a filename based on the H number and the page of the letter, making each letter image much easier to identify when working with the whole collection.
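As a rough illustration of how such filenames might be generated, here is a small sketch. The naming pattern, the `image_filename` function and the example H number are assumptions made for this example, not the scheme we actually used.

```python
import re


def image_filename(h_number: str, page: int, extension: str = "tif") -> str:
    """Build a filename from an H number and a page number (pattern is illustrative)."""
    # Keep letters and digits; turn any run of other characters into a single '-'.
    safe = re.sub(r"[^A-Za-z0-9]+", "-", h_number.strip()).strip("-")
    if not safe:
        raise ValueError("H number is empty")
    if page < 1:
        raise ValueError("page numbers start at 1")
    # Zero-pad the page number so that files sort in page order.
    return f"{safe}_p{page:03d}.{extension}"
```

With this pattern, a made-up H number of `H.0000` and page 2 would give `H-0000_p002.tif`, so all pages of one letter sit together when the files are sorted by name.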

Text encoding

Once some of the texts were digitised, we could start marking them up so that we could make them available online with selected features of the original manuscript, and make the text searchable. The Text Encoding Initiative, or the TEI for short, is a standard encoding method for representing texts in digital form, especially in the humanities, social sciences and linguistics. There is a TEI Special Interest Group for Correspondence, which exists to discuss the development of tagsets specifically related to correspondence, for example how to mark up the envelope and postal address. This gives us the opportunity to get actively involved, and we hope that our experiences can shape future developments of the TEI.
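For readers who have not seen TEI before, the sketch below builds a small piece of correspondence metadata using Python's standard XML library. `correspDesc` and `correspAction` are elements from the TEI Guidelines for describing who sent a letter to whom; the `corresp_desc` function and the placeholder names and date are invented for the example and are not taken from our own encoding. It does not attempt the envelope or postal address markup mentioned above.

```python
import xml.etree.ElementTree as ET

TEI_NS = "http://www.tei-c.org/ns/1.0"


def corresp_desc(sender: str, recipient: str, sent_on: str) -> ET.Element:
    """Build a TEI <correspDesc> recording who sent a letter to whom, and when."""
    ET.register_namespace("", TEI_NS)  # serialise TEI as the default namespace
    desc = ET.Element(f"{{{TEI_NS}}}correspDesc")

    # The sending action: a person and an ISO-format date.
    sent = ET.SubElement(desc, f"{{{TEI_NS}}}correspAction", {"type": "sent"})
    ET.SubElement(sent, f"{{{TEI_NS}}}persName").text = sender
    ET.SubElement(sent, f"{{{TEI_NS}}}date", {"when": sent_on})

    # The receiving action: just the recipient in this sketch.
    received = ET.SubElement(desc, f"{{{TEI_NS}}}correspAction", {"type": "received"})
    ET.SubElement(received, f"{{{TEI_NS}}}persName").text = recipient
    return desc


# Placeholder values only; serialise the element to an XML string.
xml_text = ET.tostring(
    corresp_desc("Sender Name", "Recipient Name", "1900-01-01"),
    encoding="unicode",
)
```

In a full TEI file this description would sit in the header alongside the transcription, and it is this kind of structured information that lets a collection be searched by sender, recipient or date.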

Once these letters are marked up, their content will be searchable, making them a far more useful and rich research resource for users than ‘flat’ images. They will also provide a useful transcribed resource that does not lose the complexity of the original.